What if the future of AI isn’t about scaling up but scaling smart? In the race to build ever-larger language models, a quiet revolution is brewing, and it’s led by small language models (SLMs).

For startup founders, tech professionals, and business decision-makers, the implications are enormous.

Especially in the realm of agentic AI, where systems act autonomously on our behalf, smaller models may offer a faster, cheaper, and more scalable path forward.

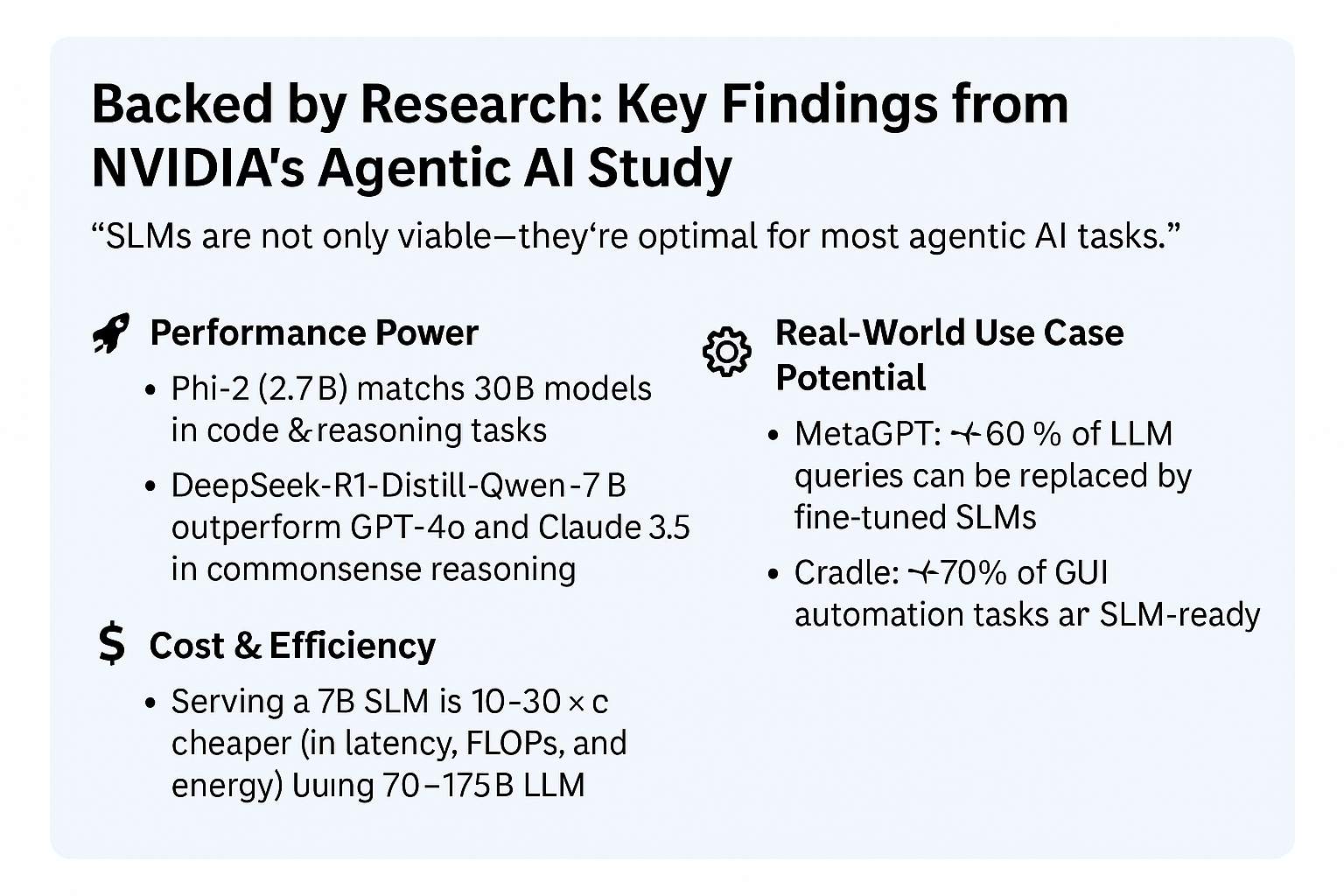

NVIDIA’s recent research paper, “Small Language Models are the Future of Agentic AI,” makes the case for this shift. The authors argue that SLMs are powerful enough for most of the repetitive, specialized calls an agent makes, and that serving a small model can be 10–30× cheaper than serving a large one in latency, energy, and compute. That makes SLMs a natural fit for the real-time decisions AI agents need to make.

Whether you’re a founder, CTO, or product leader, this is essential reading. We’ve summarized the key insights below. Scroll down to see benchmarks, use cases, and real-world examples.

📌 Prefer the full report? 👉 Download link at the bottom of this post.

Let’s explore why SLMs are poised to power the next era of AI agents.

The Technical Edge: Why Small Models Win

- Low Latency and Real-Time Response: With far fewer parameters, SLMs need less computation per generated token, so they respond faster. For agentic systems like robots or embedded assistants that must make real-time decisions, even small delays can break the experience. Small models deliver snappy performance, and because they can run locally, they skip the network round trip of a cloud API.

- Cost and Energy Efficiency: Running a massive model means costly GPUs and energy-intensive compute cycles. SLMs can run on commodity hardware, dramatically lowering both cloud bills and carbon footprints. For startups, this is the difference between experiment and deployment.

- Edge Deployment & Privacy: SLMs are light enough to run locally on mobile devices, IoT systems, or even laptops. That means no need to send data to third-party servers. From healthcare to finance, this keeps sensitive data private and compliant with regulations.

- Agile Fine-Tuning: SLMs are easier and cheaper to customize. You can fine-tune them on proprietary data or task-specific instructions and iterate quickly—a key factor when deploying domain-specific agents.
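Where does the efficiency gap come from? A rough way to see it is with two common rules of thumb for dense transformer models: generating one token costs about 2 × N floating-point operations, where N is the parameter count, and holding the weights takes about N × (bits per parameter ÷ 8) bytes. The sketch below applies them to an illustrative 7B small model and 70B large model. It ignores attention overhead, the KV cache, and batching, so treat the numbers as orders of magnitude, not benchmarks.

```python
# Back-of-envelope serving arithmetic for dense decoder-only models.
# Assumptions (rules of thumb, not measurements):
#   - generating one token costs roughly 2 * N FLOPs, where N is the
#     parameter count (attention overhead ignored);
#   - weight memory is N * bits_per_param / 8 bytes (KV cache and
#     activations ignored).

def flops_per_token(params: float) -> float:
    """Approximate forward-pass FLOPs needed to generate one token."""
    return 2.0 * params

def weight_memory_gb(params: float, bits_per_param: int) -> float:
    """Approximate memory needed just to hold the weights, in GB (1e9 bytes)."""
    return params * bits_per_param / 8 / 1e9

small, large = 7e9, 70e9  # illustrative sizes: a 7B SLM vs a 70B LLM

ratio = flops_per_token(large) / flops_per_token(small)
print(f"Compute per token: the 70B model needs {ratio:.0f}x the FLOPs of the 7B model")
print(f"7B weights at 4-bit:   {weight_memory_gb(small, 4):.1f} GB")
print(f"70B weights at 16-bit: {weight_memory_gb(large, 16):.1f} GB")
```

Even this crude estimate shows a 10× compute gap per token, and the difference between a model whose weights (about 3.5 GB) fit in a laptop's memory and one (about 140 GB) that needs multiple data-center GPUs.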

Where Small Models Dominate: Use Cases & Domains

- Robotics & Automation: Robots operating in real-world environments need to make split-second decisions. Onboard SLMs help them process data, make decisions, and adapt in real time without waiting on a round trip to the cloud.

- IoT & Embedded Systems: From smart thermostats to factory sensors, SLMs allow local data processing, anomaly detection, and event-driven actions. The result? Smarter devices with reduced cloud dependency.

- Offline Personal Assistants: Think of dictation tools, translation apps, or to-do list generators that don’t rely on the internet. SLMs can power AI agents that live on your laptop or phone and work even in airplane mode.

- Business AI Agents: Need an AI that summarizes documents, categorizes customer emails, or flags compliance risks? SLMs can be trained on internal data and deployed securely on-premises, unlocking automation without compromising control.

Small vs. Large Language Models: Capability vs. Efficiency

- Strengths of Large Models:
  - Broad general knowledge
  - Advanced reasoning and creative generation

- Strengths of Small Models:
  - Speed and reliability in specific domains
  - Controllability, fewer hallucinations on narrow tasks, and easier output formatting

- Hybrid Approach: Many companies now deploy a hybrid architecture: SLMs for everyday tasks and LLMs for complex reasoning or fallback. This ensures speed, cost savings, and task optimization.
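Here is a minimal sketch of the "SLM by default, LLM on fallback" pattern. It assumes the escalation rule is simply "the small model's output failed a format check"; real systems might instead route on confidence scores, task type, or a dedicated classifier. The model functions (`fake_slm`, `fake_llm`), the email-triage schema, and the helper names are all hypothetical stand-ins, not any vendor's API.

```python
import json
from typing import Callable

# Hypothetical model interface: takes a prompt, returns raw text.
# In practice these would wrap a local SLM and a hosted LLM.
ModelFn = Callable[[str], str]

# Example output schema for an email-triage agent (an assumption).
REQUIRED_KEYS = {"category", "priority"}

def is_valid(output: str) -> bool:
    """Accept only a JSON object that contains every required key."""
    try:
        data = json.loads(output)
    except json.JSONDecodeError:
        return False
    return isinstance(data, dict) and REQUIRED_KEYS.issubset(data)

def route(prompt: str, slm: ModelFn, llm: ModelFn) -> tuple[str, str]:
    """Try the small model first; escalate to the large model only if
    the small model's output fails validation."""
    answer = slm(prompt)
    if is_valid(answer):
        return "slm", answer
    return "llm", llm(prompt)

# Stub models so the sketch runs without any real model.
def fake_slm(prompt: str) -> str:
    if "invoice" in prompt:
        return '{"category": "billing", "priority": "low"}'
    return "Sorry, I am not sure."  # malformed: not JSON, so it gets escalated

def fake_llm(prompt: str) -> str:
    return '{"category": "other", "priority": "high"}'

print(route("Where is my invoice?", fake_slm, fake_llm))          # handled by the SLM
print(route("Summarize this contract", fake_slm, fake_llm))       # escalated to the LLM
```

Validating structure before accepting an answer plays to the SLM strength of predictable formatting: cheap calls handle the common case, and only the failures pay for the large model.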

Comparison Table: SLM vs LLM

| Feature | Small Language Models (SLMs) | Large Language Models (LLMs) |
|---|---|---|
| Latency | ⚡ Milliseconds (real-time) | 🐢 Slower, cloud-dependent |
| Cost to Run | 💸 10–30× cheaper | 💰 High GPU & energy needs |
| Format Control | ✅ Predictable & structured outputs | ⚠️ Less predictable formatting, higher risk of hallucination |
| General Knowledge | ❌ Task-specific | ✅ Broad open-domain knowledge |
| Edge Deployment | ✅ Runs locally on devices | ❌ Requires powerful servers/cloud |
| Fine-Tuning Flexibility | ✅ Fast, low-resource training | ❌ Complex and costly |
| Parameter Utilization | ✅ More efficient | ❌ Sparse activation (much of the model sits idle for any given query) |

Trends & Signals: Why the Market Is Moving to SLMs

- Rise of Open-Source SLMs: Microsoft’s Phi-3, Google’s Gemma, Hugging Face’s SmolLMs, and DeepSeek are all delivering high-performance small models to developers. These models are publicly available, modifiable, and community-supported.

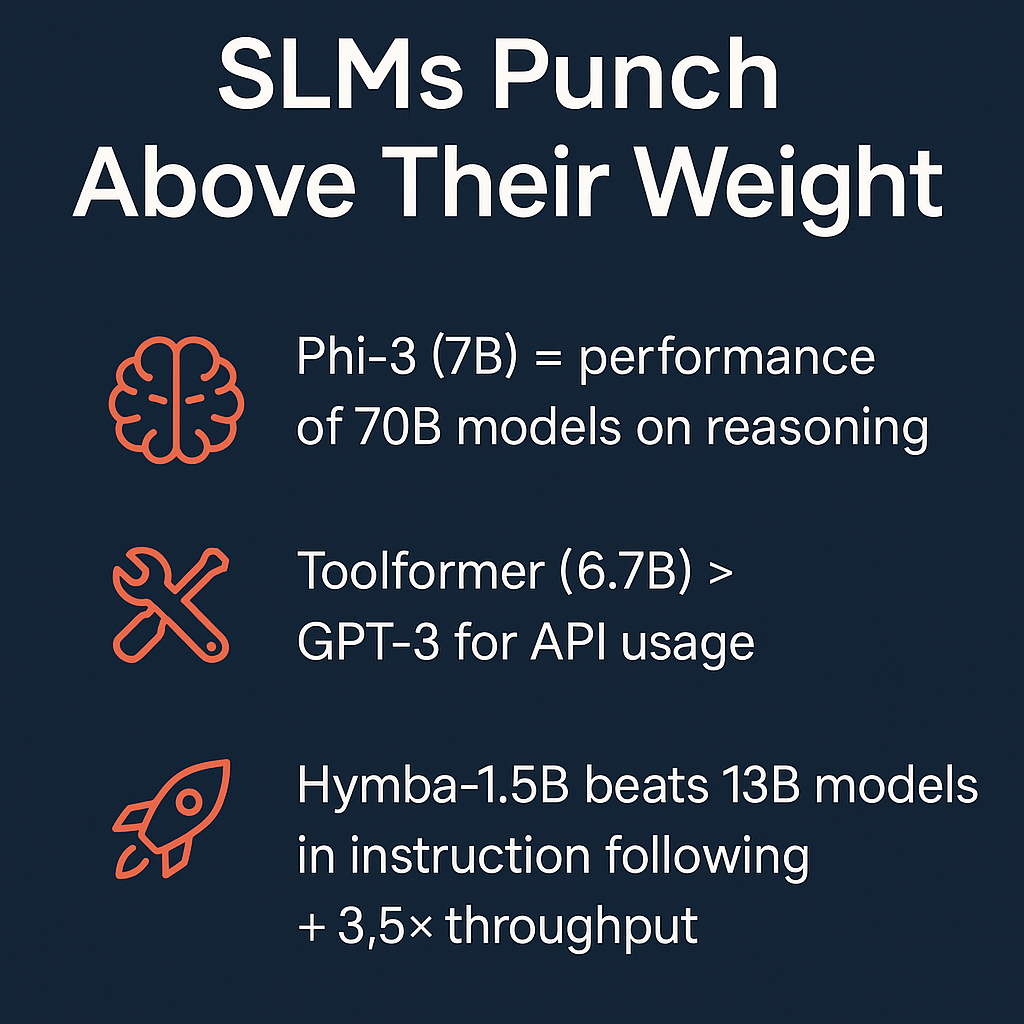

- Narrowing the Performance Gap: Thanks to improved training methods, 2B–7B parameter models now rival 30B+ models on key benchmarks.

- Regulation & Privacy: Data-protection rules from GDPR to HIPAA restrict how sensitive data is shared and processed, which favors keeping it local. SLMs make it easier to run AI inference on-device and stay compliant.

- Funding & Startup Momentum: VCs are backing startups building agentic systems with small models. Companies like Arcee, Malted, and FortyTwo are building vertical and domain-specific AI powered by SLMs.

Challenges and Open Questions

- Hallucination & Context Limitations: Smaller models can still hallucinate and struggle with long documents or complex chains of reasoning. But new architectures and synthetic training data are helping reduce these risks.

- Governance & Safety: With more teams deploying their own models, AI safety and governance must be embedded into development pipelines. Transparency tools and open safety frameworks are emerging as crucial supports.
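One common workaround for a small model's limited context window (a general technique, not something specific to the NVIDIA report) is map-reduce: split a long document into overlapping chunks, process each chunk with the SLM, then combine the partial results in a final pass. The sketch below counts words as a rough stand-in for tokens, and `toy_summarize` is a hypothetical placeholder for a real SLM call.

```python
from typing import Callable

def chunk_words(text: str, max_words: int = 200, overlap: int = 20) -> list[str]:
    """Split text into windows of at most max_words words;
    consecutive windows share `overlap` words of context."""
    if not 0 <= overlap < max_words:
        raise ValueError("overlap must be >= 0 and smaller than max_words")
    words = text.split()
    step = max_words - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_words]))
        if start + max_words >= len(words):
            break  # this window already reached the end of the text
    return chunks

def summarize_long(text: str, summarize: Callable[[str], str],
                   max_words: int = 200, overlap: int = 20) -> str:
    """Map: summarize each chunk. Reduce: summarize the joined partial summaries."""
    partials = [summarize(chunk) for chunk in chunk_words(text, max_words, overlap)]
    return summarize("\n".join(partials))

# Hypothetical stand-in for a real SLM call: keeps the first three words.
def toy_summarize(text: str) -> str:
    return " ".join(text.split()[:3])

doc = " ".join(f"w{i}" for i in range(500))  # a 500-word dummy document
chunks = chunk_words(doc)
print(len(chunks))  # 3 windows: words 0-199, 180-379, 360-499
print(summarize_long(doc, toy_summarize))
```

The overlap keeps a sentence that straddles a boundary visible to both neighboring chunks; the trade-off is a little repeated work per chunk.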

Real-World Examples

- Sellafield Nuclear Site: A custom small model reduced regulatory document review from weeks to minutes.

- Phi Models by Microsoft: Phi-1 and Phi-3 show strong performance in code and language tasks, outperforming larger models on cost and efficiency.

- Gemma by Google: Open-source, multi-modal, and fast enough to run on personal GPUs or laptops, enabling new applications in edge and desktop AI.

Strategic Takeaways for Founders, Builders, and Investors

- SLMs are not a step back—they’re a step toward smarter deployment.

- AI agents don’t need to be omniscient; they need to be reliable and task-focused.

- By investing in SLMs, you can build faster, iterate cheaper, and avoid over-reliance on centralized LLM APIs.

What Founders & Tech Teams Should Do Now

- 🧪 Test open SLMs: Phi-3, Gemma, Hymba

- 🔍 Identify repetitive agentic tasks in your system

- 🔧 Fine-tune an SLM using agent logs

- 🔀 Consider hybrid systems: SLM by default, LLM on fallback
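The "identify repetitive tasks" and "fine-tune using agent logs" steps can be prototyped from logs you already collect. This sketch assumes a hypothetical log format (task type, prompt, output, and an `ok` flag marking outputs judged correct), treats any task that makes up at least 30% of traffic as "repetitive," and writes the successful examples as prompt/completion JSON lines, a layout commonly used for fine-tuning datasets. A production pipeline would also scrub sensitive data and evaluate the fine-tuned model before rollout.

```python
import json
from collections import Counter

# Hypothetical agent log records: task type, input, output, and whether
# the output was judged correct.
logs = [
    {"task": "classify_email", "prompt": "Refund request for order 123", "output": "billing", "ok": True},
    {"task": "classify_email", "prompt": "App crashes on login", "output": "bug", "ok": True},
    {"task": "classify_email", "prompt": "Love the new dashboard", "output": "feedback", "ok": False},
    {"task": "summarize_doc", "prompt": "Quarterly report text", "output": "Revenue grew", "ok": True},
    {"task": "plan_offsite", "prompt": "Plan a 3-day team offsite", "output": "Day 1: arrive", "ok": True},
]

def repetitive_tasks(records: list[dict], min_share: float = 0.3) -> list[str]:
    """Return task types that make up at least min_share of all logged calls."""
    counts = Counter(r["task"] for r in records)
    total = sum(counts.values())
    return [task for task, n in counts.most_common() if n / total >= min_share]

def to_finetune_jsonl(records: list[dict], tasks: list[str]) -> str:
    """Keep successful examples of the chosen tasks as prompt/completion JSON lines."""
    rows = [
        json.dumps({"prompt": r["prompt"], "completion": r["output"]})
        for r in records
        if r["task"] in tasks and r["ok"]
    ]
    return "\n".join(rows)

targets = repetitive_tasks(logs)
print(targets)  # ['classify_email']
print(to_finetune_jsonl(logs, targets))
```

Filtering on the `ok` flag matters: fine-tuning on the agent's failures would teach the small model to repeat them.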

Final Word: The Era of Ubiquitous Mini-AI Agents

From smartwatches to factory lines, we’re entering an era where small AI agents operate everywhere.

Just like microservices reshaped cloud software, micro-models are reshaping how intelligence is deployed.

Founders who embrace this shift early will enjoy speed advantages, cost leadership, and the ability to embed AI into products and workflows at scale.

Big isn’t going away. But in the world of agentic AI, small is the new smart.

Want the Full Report?

Get the original NVIDIA research that’s reshaping how startups and enterprises think about AI agents.

🧠 “Small Language Models are the Future of Agentic AI”

Learn why the authors argue that SLMs can handle many real-world agentic tasks faster, more cheaply, and with more control than larger models.

👉 Download the Full NVIDIA Research PDF Now

(Perfect for founders, builders, and AI product teams exploring next-gen agentic systems.)

The Compare BizTech team is made up of people from marketing, digital marketing, and content marketing backgrounds, each with unique experiences and nuggets of wisdom to share with you. The team is passionate about creating unique, accurate, and engaging content.